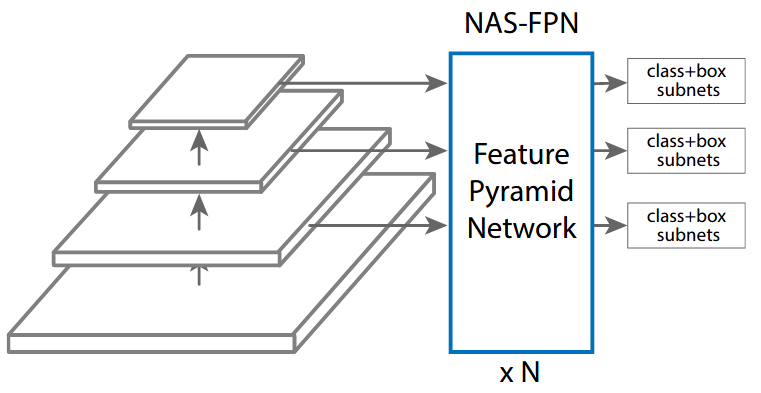

NAS-FPN

NAS-FPN: Learning Scalable Feature Pyramid Architecture for Object Detection

Abstract

Current state-of-the-art convolutional architectures for object detection are manually designed. Here we aim to learn a better architecture of feature pyramid network for object detection. We adopt Neural Architecture Search and discover a new feature pyramid architecture in a novel scalable search space covering all cross-scale connections. The discovered architecture, named NAS-FPN, consists of a combination of top-down and bottom-up connections to fuse features across scales. NAS-FPN, combined with various backbone models in the RetinaNet framework, achieves better accuracy and latency tradeoff compared to state-of-the-art object detection models. NAS-FPN improves mobile detection accuracy by 2 AP compared to state-of-the-art SSDLite with MobileNetV2 model in [32] and achieves 48.3 AP which surpasses Mask R-CNN [10] detection accuracy with less computation time.

Results and Models

We benchmark the new training schedule introduced in the NAS-FPN paper: crop training, large batch size, unfrozen BN, and 50 epochs. As in the paper, RetinaNet is used as the detector.
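The four ingredients of this schedule can be sketched as plain Python dicts in the style of an MMDetection config. This is only an illustration, not the contents of the config files in this directory: the 640 crop size is read off the config file names and the 50 epochs come from the text above, while the GPU count, per-GPU batch size, and resize ratio range are assumed values chosen for the example.

```python
# Illustrative sketch of the schedule as MMDetection-style config dicts.
# Values marked "assumed" are NOT taken from this README.

crop_size = (640, 640)  # from the "crop640" part of the config file names

# Unfrozen BN: BN parameters stay trainable and BN layers use batch
# statistics during training (norm_eval=False keeps them in train mode).
norm_cfg = dict(type='BN', requires_grad=True)
model = dict(
    backbone=dict(norm_cfg=norm_cfg, norm_eval=False),
    neck=dict(norm_cfg=norm_cfg),
)

# Crop training: rescale the image randomly, then crop a fixed-size window.
train_pipeline = [
    dict(type='RandomResize', scale=crop_size,
         ratio_range=(0.8, 1.2), keep_ratio=True),  # ratio_range is assumed
    dict(type='RandomCrop', crop_size=crop_size),
    dict(type='RandomFlip', prob=0.5),
]

# Large batch: total batch size = number of GPUs x samples per GPU.
num_gpus = 8         # assumed
samples_per_gpu = 8  # assumed
train_dataloader = dict(batch_size=samples_per_gpu)
total_batch_size = num_gpus * samples_per_gpu

# 50 epochs.
train_cfg = dict(type='EpochBasedTrainLoop', max_epochs=50)

print(f'total batch size: {total_batch_size}, epochs: {train_cfg["max_epochs"]}')
```

In a real config these dicts would be merged over a RetinaNet base config rather than defined from scratch.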

| Backbone | Lr schd | Mem (GB) | Inf time (fps) | box AP | Config | Download |
|---|---|---|---|---|---|---|
| R-50-FPN | 50e | 12.9 | 22.9 | 37.9 | config | model, log |
| R-50-NASFPN | 50e | 13.2 | 23.0 | 40.5 | config | model, log |

Note: We find that training NAS-FPN is unstable, and there is a small chance that results come out 3% mAP lower.
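To put the note in context, the short calculation below compares the NAS-FPN gain from the table with the possible drop. The note does not say whether "3% mAP" means 3 AP points or a 3 percent relative drop, so both readings are computed; treating them as the only two candidates is an assumption made for this example.

```python
# box AP values copied from the results table
ap_fpn = 37.9
ap_nasfpn = 40.5

# AP points gained by replacing FPN with NAS-FPN
gain = round(ap_nasfpn - ap_fpn, 1)

# Two readings of "3% mAP lower" (assumption: the note does not say which)
unlucky_absolute = round(ap_nasfpn - 3.0, 1)   # 3 AP points lower
unlucky_relative = round(ap_nasfpn * 0.97, 1)  # 3 percent lower, relative

print(f'gain over FPN:          {gain} AP')
print(f'unlucky run (absolute): {unlucky_absolute} AP')
print(f'unlucky run (relative): {unlucky_relative} AP')
```

Under the absolute reading an unlucky run lands at 37.5 AP, slightly below the FPN baseline; under the relative reading it lands at 39.3 AP and still beats the baseline.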

Citation

@inproceedings{ghiasi2019fpn,
  title={Nas-fpn: Learning scalable feature pyramid architecture for object detection},
  author={Ghiasi, Golnaz and Lin, Tsung-Yi and Le, Quoc V},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},
  pages={7036--7045},
  year={2019}
}